When using microservices (typically the go-to choice in system design interviews), it's common for a single action in one service to require work from several other services.

Consider an e-commerce website with separate services for orders, payments, inventory, email, and analytics. When someone clicks "buy", the order service processes the order, but then the payment service needs to charge the card, the inventory service needs to reserve items, the email service needs to send a confirmation, and analytics needs to record the purchase.

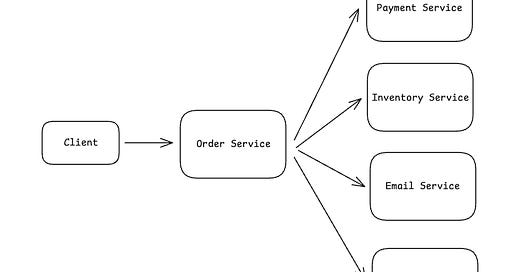

The most straightforward approach is to have the order service call each of the other services directly via RPC or API calls.

But if the email service is down, the entire order fails even though email isn't critical. Worse, if payment succeeds and inventory then fails, you're left with a charged customer and no reserved items.
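
The following minimal Python sketch shows that partial failure. It assumes plain function calls stand in for the RPCs, and `charge_card`, `reserve_items`, `send_email`, and `InventoryDown` are hypothetical names. The key detail is that nothing undoes the charge when the second call fails.

```python
class InventoryDown(Exception):
    """Hypothetical error raised when the inventory service is unavailable."""


charged: list[str] = []   # orders the payment service has charged
reserved: list[str] = []  # orders the inventory service has reserved


def charge_card(order_id: str) -> None:
    charged.append(order_id)  # the charge succeeds: money has moved


def reserve_items(order_id: str) -> None:
    raise InventoryDown(f"inventory unavailable for order {order_id}")


def send_email(order_id: str) -> None:
    pass  # never reached in this scenario


def place_order(order_id: str) -> None:
    # The order service calls each downstream service in turn and blocks on each.
    charge_card(order_id)
    reserve_items(order_id)  # raises, so the remaining calls are skipped
    send_email(order_id)


try:
    place_order("123")
except InventoryDown:
    pass  # the order "fails", but the charge above is never rolled back

print(charged, reserved)  # ['123'] []
```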

With these failure modes in mind, we can add message queues to decouple the services. Now the order service sends messages to different queues, and each service processes them independently.

This helps with resilience: if the email service is down, messages simply sit in its queue until it comes back online. But a problem remains: the order service still needs to know about every system that cares about orders.

Several months later, the team decides to add a fraud detection service, so the order service must be modified to send messages to a fraud queue. Then the marketing team wants to trigger campaigns on large orders, which means another code change to the order service. Because the order service acts as a central orchestrator, every new requirement forces you to modify and redeploy it.
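
The following minimal Python sketch makes that coupling concrete. It uses in-process `queue.Queue` objects as stand-ins for real message queues, and the queue names and `place_order` function are hypothetical. The telling part is the hard-coded fan-out: each new consumer (fraud, marketing) means editing this function and redeploying the order service.

```python
from queue import Queue

# Hypothetical stand-ins for real broker queues, one per downstream service.
QUEUES: dict[str, Queue] = {
    "payments": Queue(),
    "inventory": Queue(),
    "email": Queue(),
    "analytics": Queue(),
    # Adding fraud detection means adding "fraud": Queue() here AND
    # another put() in place_order below, then redeploying.
}


def place_order(order_id: str, amount_cents: int) -> None:
    """The order service as orchestrator: it must know every consumer by name."""
    QUEUES["payments"].put({"action": "charge", "order_id": order_id, "amount_cents": amount_cents})
    QUEUES["inventory"].put({"action": "reserve", "order_id": order_id})
    QUEUES["email"].put({"action": "send_confirmation", "order_id": order_id})
    QUEUES["analytics"].put({"action": "record_purchase", "order_id": order_id, "amount_cents": amount_cents})


place_order("123", 5000)
print({name: q.qsize() for name, q in QUEUES.items()})
```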

Lastly, coordination is a headache. Messages in different queues are processed at different speeds. A user might get their confirmation email before the payment has actually gone through. Or they might check their order history and see conflicting information because the inventory service has updated but analytics hasn't caught up yet.

The fundamental issue is coupling through knowledge. Whether using direct API calls or message queues, the order service must know about every downstream system and what they need. This creates a bottleneck where one team (orders) becomes responsible for integrating everyone else's requirements.

To address these problems, we can use an approach called event-driven architecture.

The Solution: Event-Driven Architecture (EDA)

Instead of the order service sending commands to other services, it announces what happened. That's the core shift with event-driven architecture. Services publish events - facts about things that occurred - and other services listen for the events they care about.

The key difference is that a command says "charge this card for $50", while an event says "order #123 was placed for $50". The first expects someone to act; the second simply states a fact. The order service doesn't care what happens next.

Now the payment service subscribes to `OrderPlaced` events and charges cards when it sees them. The inventory service also subscribes and reserves items. Same for the email service and so on. The order service has no idea these other services even exist. And it doesn't need to.
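
The following toy Python version illustrates that flow. It assumes a synchronous, in-process `EventBus` class invented for illustration. A real system would use a broker such as Kafka and deliver events asynchronously. Compared with the queue-based orchestrator, the order service now publishes one `OrderPlaced` fact and holds no list of consumers. Adding fraud detection is a new `subscribe` call in the fraud service, not a change to the order service.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class OrderPlaced:
    """An event: an immutable fact, named in the past tense."""
    order_id: str
    amount_cents: int


class EventBus:
    """Hypothetical in-process pub/sub; stands in for a real message broker."""

    def __init__(self) -> None:
        self._handlers: dict[type, list[Callable]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        # The publisher never learns who, if anyone, is listening.
        for handler in self._handlers[type(event)]:
            handler(event)


bus = EventBus()
log: list[str] = []

# Each downstream service registers its own interest.
bus.subscribe(OrderPlaced, lambda e: log.append(f"payment: charge {e.amount_cents} for {e.order_id}"))
bus.subscribe(OrderPlaced, lambda e: log.append(f"inventory: reserve items for {e.order_id}"))
bus.subscribe(OrderPlaced, lambda e: log.append(f"email: confirm {e.order_id}"))
# Months later, fraud detection subscribes; place_order below is untouched.
bus.subscribe(OrderPlaced, lambda e: log.append(f"fraud: score {e.order_id}"))


def place_order(order_id: str, amount_cents: int) -> None:
    # ...persist the order locally, then announce what happened...
    bus.publish(OrderPlaced(order_id, amount_cents))


place_order("123", 5000)
print(log)
```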

When to Use in Interviews

In practice, I see far more candidates introduce event-driven architecture where it doesn't belong than miss it where it's actually needed.

My advice is to solve your problem as simply as possible. Most requests should be handled entirely within one service. When the client calls the order service to place an order, that service can handle everything internally, with no need to coordinate with other services at all. Keep your services truly independent where you can.

That said, event-driven architecture does solve real problems. Here are a few places where it may make sense in an interview.

1. When you're dealing with financial data or audit requirements

Financial systems and anything touching money need immutable audit trails. Every transaction, refund, and dispute must be recorded forever - not just for good practice but for legal compliance. This is why Stripe publishes an event for every payment action. When a charge fails, when it's refunded, when it's disputed - each becomes an immutable event that forms their audit log.
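
The following minimal Python sketch shows an append-only audit log. It is not Stripe's actual implementation or API: `AuditLog`, `PaymentEvent`, and the event kinds are hypothetical. The design choice that matters is what the log deliberately omits. There is no update or delete, so answering "what happened to this charge?" is just reading its events in order.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PaymentEvent:
    seq: int            # position in the log, assigned on append
    charge_id: str
    kind: str           # hypothetical kinds, e.g. "ChargeSucceeded", "ChargeRefunded"
    amount_cents: int
    at: datetime


class AuditLog:
    """Append-only: there is deliberately no update or delete method."""

    def __init__(self) -> None:
        self._events: list[PaymentEvent] = []

    def append(self, charge_id: str, kind: str, amount_cents: int) -> PaymentEvent:
        event = PaymentEvent(len(self._events), charge_id, kind, amount_cents,
                             datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def history(self, charge_id: str) -> tuple[PaymentEvent, ...]:
        """Everything that happened to one charge, in the order it happened."""
        return tuple(e for e in self._events if e.charge_id == charge_id)


audit = AuditLog()
audit.append("ch_1", "ChargeSucceeded", 5000)
audit.append("ch_1", "ChargeDisputed", 5000)
audit.append("ch_1", "ChargeRefunded", 5000)
print([e.kind for e in audit.history("ch_1")])
```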

The same applies to healthcare systems tracking patient records, trading platforms recording every order, or any system where "show me exactly what happened" is a regulatory requirement. If you're not allowed to delete or modify history, you need events.

2. When building platforms with external integrations

If you're designing a platform where third-party developers or unknown consumers need your data, events become important. Shopify is a good example: it publishes events to webhooks because it can't possibly know what each merchant wants to do. One merchant sends orders to their accounting system, another updates inventory, a third triggers dropshipping, and a fourth runs custom analytics.

Shopify publishes the event once, and thousands of apps consume it in their own way. The alternative - having Shopify directly integrate with every possible merchant system - would be impossible to maintain. This pattern shows up whenever you have an ecosystem of integrations rather than a fixed set of internal services.
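
The following minimal Python sketch shows webhook-style fan-out under stated assumptions. It is not Shopify's API: `WebhookRegistry`, the `order.placed` topic, and the injected `send` transport are hypothetical, and a real platform would POST over HTTP with retries. External apps register their own endpoints at runtime. The platform serializes each event once and delivers it to every registered endpoint, so one broken endpoint doesn't block the others.

```python
import json
from collections import defaultdict
from typing import Callable

# Hypothetical transport: (url, body) -> None; raises on delivery failure.
Send = Callable[[str, bytes], None]


class WebhookRegistry:
    def __init__(self, send: Send) -> None:
        self._send = send
        self._endpoints: dict[str, list[str]] = defaultdict(list)

    def register(self, topic: str, url: str) -> None:
        """Third-party apps register themselves; the platform hard-codes no one."""
        self._endpoints[topic].append(url)

    def publish(self, topic: str, payload: dict) -> dict[str, bool]:
        body = json.dumps({"topic": topic, "data": payload}).encode()  # serialize once
        results: dict[str, bool] = {}
        for url in self._endpoints[topic]:
            try:
                self._send(url, body)
                results[url] = True
            except Exception:
                # One failing endpoint must not stop delivery to the rest;
                # a real system would schedule a retry here.
                results[url] = False
        return results


delivered: list[str] = []


def fake_send(url: str, body: bytes) -> None:
    if "broken" in url:
        raise ConnectionError(url)
    delivered.append(url)


hooks = WebhookRegistry(fake_send)
hooks.register("order.placed", "https://accounting.example/hook")
hooks.register("order.placed", "https://dropship.example/hook")
hooks.register("order.placed", "https://broken.example/hook")
result = hooks.publish("order.placed", {"order_id": "123"})
print(result)
```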

3. When you need real-time data pipelines

Real-time analytics and streaming data require events, but be careful with the word "real-time." If hourly or even 15-minute batches work, stick with them. True real-time means sub-second latency where the business genuinely can't wait.

Uber processes millions of ride events through Kafka specifically for dynamic pricing. It needs to detect demand spikes instantly to trigger surge pricing, since waiting even 5 minutes would mean lost revenue and poor driver distribution. Netflix uses event streaming for its content finance systems, not because it's "cool," but because it needs to track viewing instantly for content licensing costs that accrue per view.

This is where event-driven architecture meets stream processing. EDA is the architectural pattern where services communicate through discrete events rather than direct calls. Stream processing is the computational technique of continuously analyzing sequences of events as they arrive. Uber's pricing system uses both: event-driven communication between services (driver location updates, ride requests) and stream processing to aggregate those events into demand patterns. You can have stream processing without EDA (batch jobs that process event logs) and EDA without stream processing (simple event handlers that update databases), but the two work well together for real-time systems.
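
The following minimal Python sketch shows the stream-processing half: a tumbling-window count of ride requests per zone, updated one event at a time as events arrive. It is not Uber's algorithm. The `RideRequested` event, the 60-second window, and the "requests exceed 2x available drivers" surge rule are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class RideRequested:
    zone: str
    ts: float  # event time in seconds


WINDOW_SECONDS = 60  # hypothetical tumbling-window size
SURGE_RATIO = 2.0    # hypothetical: surge when requests > 2x available drivers


def window_start(ts: float) -> int:
    """Map an event time to the start of its fixed, non-overlapping window."""
    return int(ts // WINDOW_SECONDS) * WINDOW_SECONDS


class DemandAggregator:
    """Consumes events one at a time and keeps a running count per (zone, window)."""

    def __init__(self) -> None:
        self.counts: Counter[tuple[str, int]] = Counter()

    def on_event(self, event: RideRequested) -> None:
        self.counts[(event.zone, window_start(event.ts))] += 1

    def surging_zones(self, window: int, drivers: dict[str, int]) -> list[str]:
        # Zones with no known drivers are treated as having 1 to avoid a zero threshold.
        return sorted(
            zone
            for (zone, w), n in self.counts.items()
            if w == window and n > SURGE_RATIO * max(drivers.get(zone, 0), 1)
        )


agg = DemandAggregator()
for ts in (0, 5, 10, 15, 20):
    agg.on_event(RideRequested("downtown", ts))
agg.on_event(RideRequested("airport", 30))
print(agg.surging_zones(0, {"downtown": 2, "airport": 3}))  # ['downtown']
```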

4. When you need to replay or reprocess historical data

Traditional CRUD operations are destructive: once you update a record, the old state is gone. Event sourcing, which stores every change as an immutable event, keeps everything and lets you replay history with new logic. This becomes critical when you need to fix past mistakes or apply new rules retroactively.
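
The following minimal Python sketch of event sourcing assumes a hypothetical account with `Deposited` and `Withdrew` events. Current state is never stored directly. Instead, it is derived by folding a pure transition function over the event history, which means any past state can also be recomputed.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Union


@dataclass(frozen=True)
class Deposited:
    amount_cents: int


@dataclass(frozen=True)
class Withdrew:
    amount_cents: int


def apply_event(balance: int, event: Union[Deposited, Withdrew]) -> int:
    """Pure transition: current state + one event -> next state."""
    if isinstance(event, Deposited):
        return balance + event.amount_cents
    return balance - event.amount_cents


events = [Deposited(10_000), Withdrew(2_500), Deposited(1_000)]

# A CRUD table would hold only the final number; here it is derived from history.
current = reduce(apply_event, events, 0)
# Any earlier state is still recoverable, e.g. the balance after the first two events.
after_two = reduce(apply_event, events[:2], 0)
print(current, after_two)  # 8500 7500
```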

Consider fraud detection at a payment processor. You develop new fraud rules that would have caught attacks from last month. With events, you replay last month's transactions through the new rules to identify previously missed fraud. With traditional architecture, that historical data is gone or transformed beyond use. Investment banks use this same pattern to recalculate risk when models change, replaying months of trades through updated algorithms.
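
The following minimal Python sketch shows that replay. It assumes last month's `TransactionAuthorized` events are still available in their original order. The event type, the `replay` helper, and the rule itself ("same card used in two countries within an hour") are hypothetical. The rule sees only events that came before each transaction, just as it would have if it had been running live.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class TransactionAuthorized:
    txn_id: str
    card_id: str
    amount_cents: int
    country: str
    ts: float  # seconds


Rule = Callable[[TransactionAuthorized, list[TransactionAuthorized]], bool]


def new_rule(txn: TransactionAuthorized, prior: list[TransactionAuthorized]) -> bool:
    # Hypothetical rule: same card seen in a different country within the last hour.
    return any(
        p.card_id == txn.card_id and p.country != txn.country and txn.ts - p.ts < 3600
        for p in prior
    )


def replay(events: Iterable[TransactionAuthorized], rule: Rule) -> list[str]:
    """Re-run history through a rule, exposing only events that preceded each one."""
    seen: list[TransactionAuthorized] = []
    flagged: list[str] = []
    for event in events:
        if rule(event, seen):
            flagged.append(event.txn_id)
        seen.append(event)
    return flagged


last_month = [
    TransactionAuthorized("t1", "card_A", 2_000, "US", 0),
    TransactionAuthorized("t2", "card_B", 5_000, "US", 100),
    TransactionAuthorized("t3", "card_A", 90_000, "BR", 1_800),
    TransactionAuthorized("t4", "card_B", 4_000, "US", 7_200),
]
print(replay(last_month, new_rule))  # ['t3']
```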

What doesn't need EDA

Most system requirements that seem complex actually don't need events:

Service-to-service communication - If the order service needs to tell the payment service to charge a card and wait for the result, that's a synchronous API call. Don't complicate it with events unless you truly need fire-and-forget semantics.

Known integrations between fixed services - If three specific services need order data, just call their APIs. Events are for unbounded, unknown consumers, not a fixed set of internal services.

Scaling challenges - Regular horizontal scaling with load balancers works fine for most systems. Events don't magically make things scale better - they just move the complexity to managing event brokers and consumer lag.

Microservices architectures - Simply having microservices doesn't mean you need events. Most microservice communication should be synchronous API calls. Only add events when you need true decoupling or one of the specific patterns above.

Making the decision

In your interview, listen for these specific requirements:

- Compliance and audit trails that must be immutable

- Open platforms where external developers integrate

- Real-time processing with sub-second latency requirements

- Need to replay historical data with new logic

If you don't hear these specific needs, stick with simpler architectures. You can always add events later if requirements change, but you can't easily remove the complexity once it's there.

The best system designers know when not to use a pattern. For event-driven architecture, that's most of the time. Save it for the specific scenarios where its benefits outweigh the significant complexity it adds.

Conclusion

Event-driven architecture is a powerful pattern that solves some of the hardest problems in distributed systems. When you need immutable audit trails, external integrations, real-time streaming, or historical replay, events are often the only viable solution. But this power comes with significant complexity - eventual consistency, debugging challenges, schema evolution, and operational overhead that can overwhelm teams who adopt it too eagerly.

In interviews, demonstrate maturity by exploring simpler solutions first. When the interviewer describes microservices, start with synchronous APIs. When they mention scale, consider whether horizontal scaling solves the problem. Only reach for events when you hear those specific triggers: compliance requirements, unknown external consumers, sub-second latency needs, or replay requirements. Show that you understand what you're signing up for.

Great systems aren't built by applying every available pattern but by choosing the right pattern for each problem. Event-driven architecture is a sharp tool in your toolbox, and recognizing when not to use it is just as important as knowing how to implement it. Master that restraint, and you'll design better systems than candidates who throw trendy patterns at every problem.
